Opnsense 100% CPU utilization due to rand_harvest

A workaround for high CPU utilization (99-100%) when running Opnsense in Hetzner Cloud or other KVM environments caused by rand_harvestq.

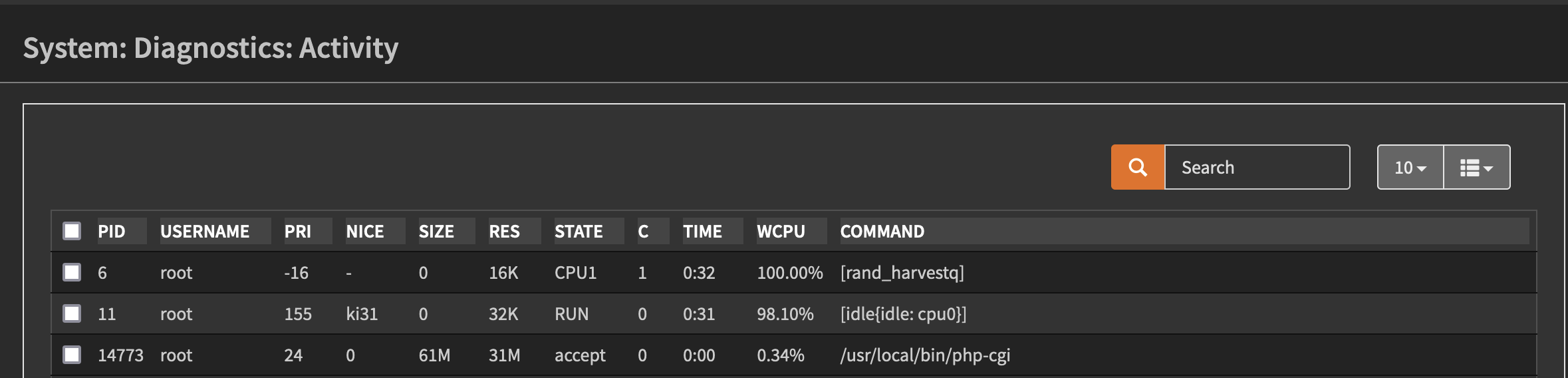

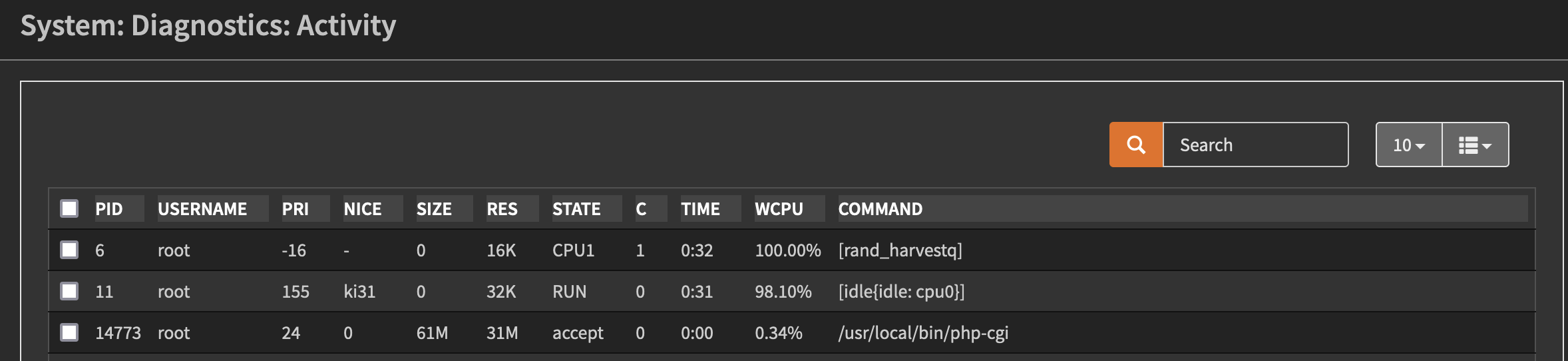

Running Opnsense on Hetzner Cloud led to an issue where the instance was consistently at 99-100% CPU utilization, impacting the ability to perform any operations. This was caused by a kernel process that gathers entropy for random number generation, called rand_harvest or rand_harvestq.

This process uses a kernel module called virtio_random.ko on hosts running on KVM as the hypervisor - such as Hetzner Cloud.

The full-tilt CPU utilization was breaking the ability to modify Wireguard configurations without rebooting the host each time I needed to restart the service for a given Wireguard instance.

If you have access to the underlying KVM hypervisor, you may be able to add a VirtIO Random Number Generator to your VM to solve this. If this is a cloud environment outside your control, see the workaround below.

Workaround:

This workaround simply moves the kernel module called virtio_random.ko to a new directory so that it does not load upon boot.

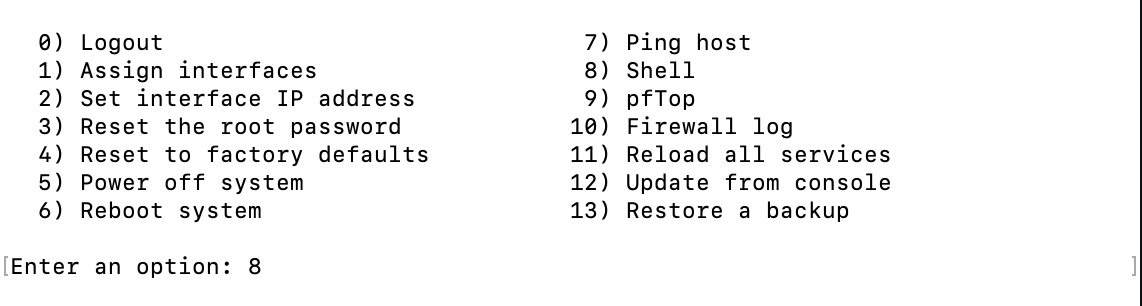

SSH into the Opnsense instance - select option 8 for shell:

Move the virtio_random.ko kernel module:

You'll create a directory for the disabled kernel module to reside in, then move it.

mkdir /boot/disabled/ && mv /boot/kernel/virtio_random.ko /boot/disabled/

Check that the kernel module was moved:

ls /boot/disabled/

virtio_random.ko

You should see virtio_random.ko located here.
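The move and the check above can also be wrapped in a small function that is safe to run more than once. This is only a sketch: `disable_module` is a hypothetical helper name, not an Opnsense or FreeBSD tool, and it assumes the same paths used in this post.

```shell
#!/bin/sh
# Sketch: move a kernel module out of the boot path, safely re-runnable.
# disable_module is a hypothetical helper, not part of Opnsense or FreeBSD.
# Arguments: <kernel_dir> <disabled_dir> <module_file>
disable_module() {
    kernel_dir=$1
    disabled_dir=$2
    module=$3

    # -p: no error if the directory already exists from an earlier run
    mkdir -p "$disabled_dir" || return 1

    if [ -f "$kernel_dir/$module" ]; then
        # Module is in the kernel directory: move it aside
        mv "$kernel_dir/$module" "$disabled_dir/" || return 1
        echo "moved $module to $disabled_dir"
    elif [ -f "$disabled_dir/$module" ]; then
        # Nothing to do, an earlier run already moved it
        echo "$module is already disabled"
    else
        echo "$module not found in $kernel_dir or $disabled_dir" >&2
        return 1
    fi
}

# On the firewall, as root:
# disable_module /boot/kernel /boot/disabled virtio_random.ko
```

Using `mkdir -p` avoids the error the one-liner gives when /boot/disabled/ already exists, so the same function can be re-run later without changes.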

Once confirmed, reboot:

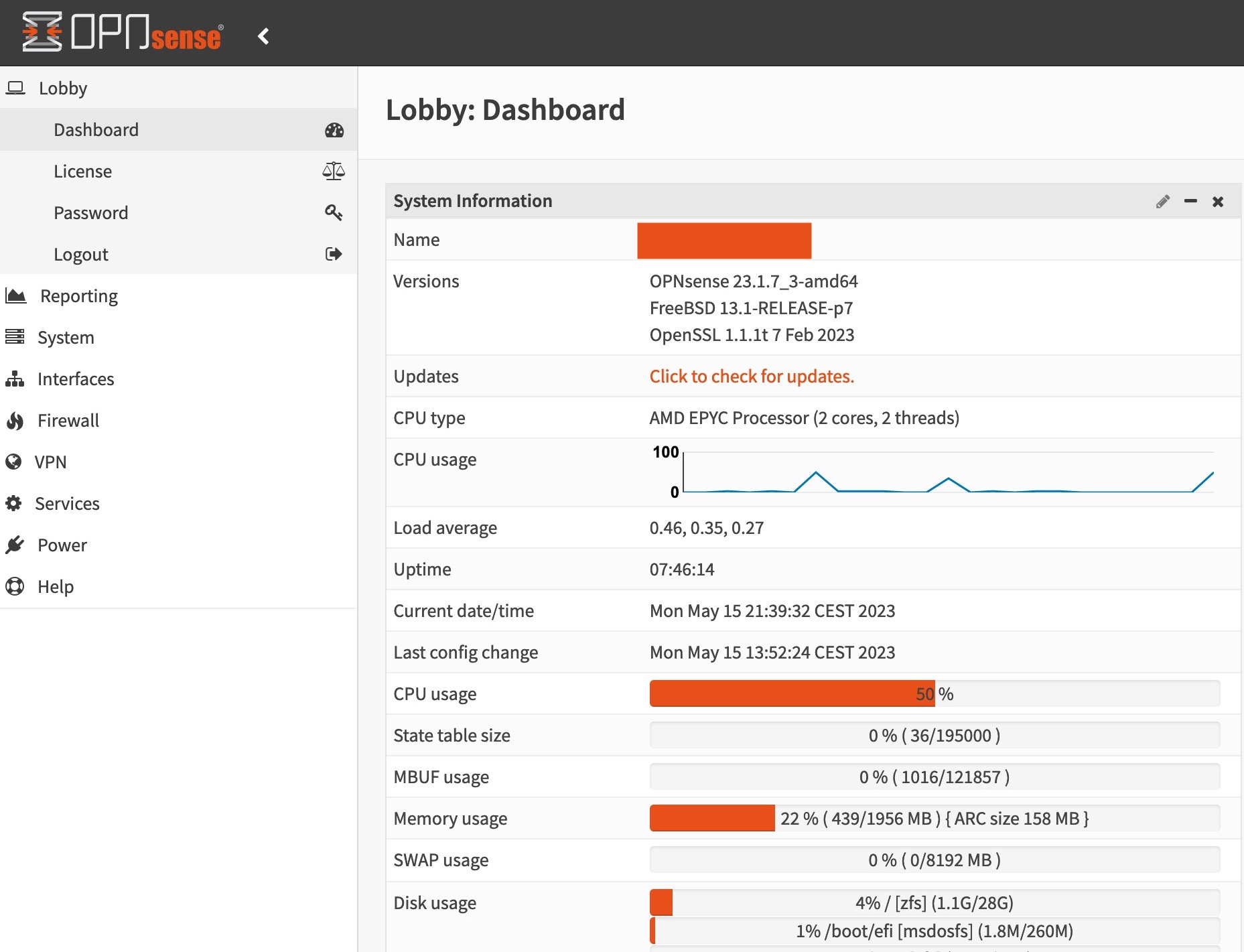

reboot

Before reboot:

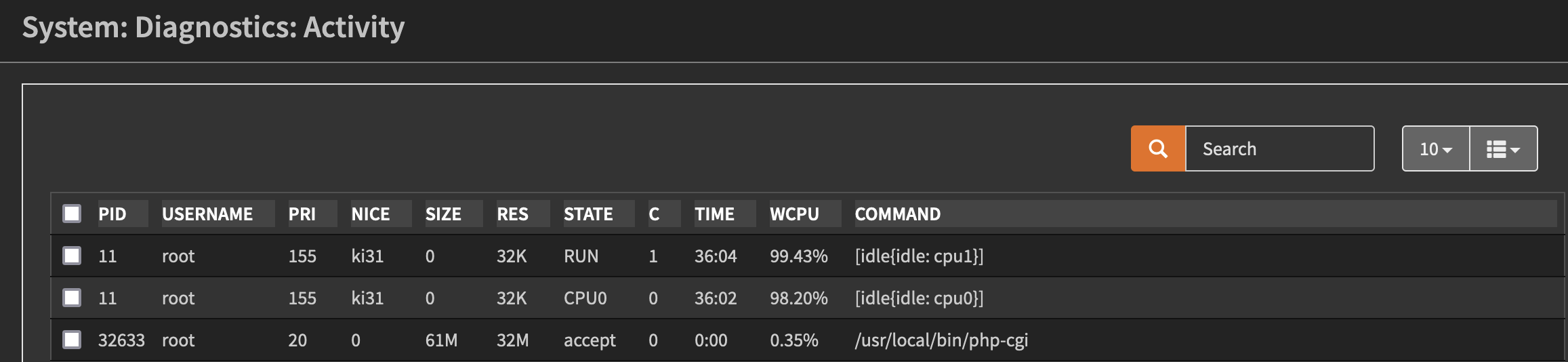

After reboot:

Implications

I'm not 100% sure of the implications of disabling this module, but this workaround does solve the CPU utilization problem which was bringing Opnsense to a crawl.

This may or may not persist across Opnsense software version upgrades.
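Since an upgrade could put the module back, a quick check after upgrading may be useful. The sketch below is an assumption on my part rather than something from the original source: `workaround_status` is a hypothetical helper that reads the output of `kldstat` (FreeBSD's listing of loaded kernel modules) on standard input and also looks for the file in the kernel directory.

```shell
#!/bin/sh
# Sketch: report whether the workaround still holds.
# workaround_status is a hypothetical helper; it expects `kldstat` output on stdin.
# Arguments: <kernel_dir> <module_file>
workaround_status() {
    kernel_dir=$1
    module=$2

    if grep -qwF -- "$module"; then
        # The module shows up in the list of loaded modules
        echo "loaded"
    elif [ -f "$kernel_dir/$module" ]; then
        # Not loaded now, but the file is back in the kernel directory
        echo "present"
    else
        # Neither loaded nor in the kernel directory
        echo "disabled"
    fi
}

# On the firewall:
# kldstat | workaround_status /boot/kernel virtio_random.ko
```

If this prints "loaded" or "present" after an upgrade, the module has likely been restored and the move would need to be repeated, followed by a reboot.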

Source of this workaround:

The source is in German. It can be translated, but I'm also writing out the process in English to make it easier, since it was somewhat hard to find when searching in English.

FreeBSD Issue Tracker:

This issue does appear to be logged, but it has no resolution yet.